Top 10 reasons to choose Cisco ACI for your Data Center Network (Part 2 of 2)

In Part 2 of this blog series, we cover the major features of ACI in detail. Have you read the overview of Cisco ACI for your Data Center yet?

Simplified Automation with Application-Driven Policy Model

Picture a Data Center with 50 leaf switches and a pair of spine switches. Your job is to build the network from scratch on brand-new devices (non-ACI, traditional network), with all the VLANs, IP routing, VRFs, switchport configurations, and ACLs. How many days would it take to configure this network? With a traditional approach, I estimate roughly one month for a single engineer, setting up every device manually and covering the following high-level activities:

- Upgrading the OS to the recommended version on all switches

- Configuring L2 switchports with trunk and access configuration

- Configuring L3 routed interfaces with IP addresses

- Configuring L3 routing in the fabric

- Configuring ACLs, if required

- Configuring VRFs for macro-segmentation

- Configuring port channels, if required

- Configuring the underlay and overlay

Now, what if I told you that this deployment can be automated and completed within 3 to 5 days with Cisco ACI? Isn't that cool?

In Cisco ACI, you first set up an APIC cluster, discover the switches, provision the fabric, and then create policies to match your requirements, all from a single dashboard. There is no need to visit every rack and configure each device manually.
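The "single dashboard" is backed by the APIC REST API, so the same policies can also be pushed from a script. Below is a minimal Python sketch using only the standard library, assuming an APIC reachable at a URL you supply. The `/api/aaaLogin.json` login endpoint, the `/api/mo/uni.json` policy-universe endpoint, and the `aaaUser`/`fvTenant` classes come from the APIC REST API; the `ApicClient` class and the helper names are hypothetical, for illustration only.

```python
import json
import ssl
import urllib.request
from http.cookiejar import CookieJar


def login_body(user: str, password: str) -> dict:
    """JSON body for APIC's login endpoint, POST /api/aaaLogin.json."""
    return {"aaaUser": {"attributes": {"name": user, "pwd": password}}}


def tenant_body(name: str) -> dict:
    """JSON body that creates (or updates) a tenant when POSTed to /api/mo/uni.json."""
    return {"fvTenant": {"attributes": {"name": name}}}


class ApicClient:
    """Hypothetical minimal client; the session cookie set at login is kept in a CookieJar."""

    def __init__(self, base_url: str, verify_tls: bool = True) -> None:
        self.base_url = base_url.rstrip("/")
        ctx = ssl.create_default_context()
        if not verify_tls:
            # Lab use only: APICs often run with self-signed certificates.
            ctx.check_hostname = False
            ctx.verify_mode = ssl.CERT_NONE
        self.opener = urllib.request.build_opener(
            urllib.request.HTTPCookieProcessor(CookieJar()),
            urllib.request.HTTPSHandler(context=ctx),
        )

    def post(self, path: str, body: dict) -> dict:
        req = urllib.request.Request(
            self.base_url + path,
            data=json.dumps(body).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with self.opener.open(req) as resp:
            return json.load(resp)

    def login(self, user: str, password: str) -> dict:
        return self.post("/api/aaaLogin.json", login_body(user, password))

    def create_tenant(self, name: str) -> dict:
        return self.post("/api/mo/uni.json", tenant_body(name))
```

Against a lab APIC, usage would look like `client = ApicClient("https://apic.example.local", verify_tls=False)`, then `client.login("admin", "...")` and `client.create_tenant("Prod")`. One login followed by one POST per policy replaces a console session on each of the 50 leafs.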

Elimination of Layer 2 Flooding in Fabric

In traditional networks with a collapsed core architecture, the L3 IP gateways sit on the core switches, and the leaf/access switches operate purely at Layer 2. The challenge comes in a large network with many access/leaf switches: Broadcast, Unknown Unicast, and Multicast (BUM) traffic from every host is flooded to all the switches and endpoints in the broadcast domain. Spanning Tree adds its own complications, because it blocks redundant links to prevent loops, so you cannot use all physical links at the same time.

How does ACI solve this problem?

ACI's architecture is based on the Spine-Leaf (Clos) model, with Equal-Cost Multipathing (ECMP) between the spine and leaf switches, which provides path redundancy across the entire fabric. The links between spines and leafs are Layer-3 routed, and the underlay runs IS-IS, a link-state routing protocol that supports ECMP across those links. Every leaf forms an IS-IS adjacency with every spine to advertise its loopbacks and build the underlay. Because these links are routed, there is no Layer-2 flooding between spines and leafs. For the overlay, ACI uses VXLAN to forward data between any devices within the fabric.
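ECMP keeps every packet of a given flow on one path, which avoids reordering, while spreading different flows across all spines. A common way to achieve this is to hash the flow's 5-tuple and take the result modulo the number of equal-cost next hops. The Python sketch below illustrates that idea only; it is not ACI's actual hardware hash, which is platform-specific, and the spine names are hypothetical.

```python
import hashlib
from collections import Counter
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    protocol: int
    src_port: int
    dst_port: int


def pick_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Deterministically map a flow onto one of several equal-cost next hops."""
    key = "|".join(str(field) for field in flow).encode()
    # Stable hash: the same flow always lands on the same spine.
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return next_hops[digest % len(next_hops)]


spines = ["spine-1", "spine-2"]
flows = [FiveTuple("10.0.1.10", "10.0.2.20", 6, 49152 + i, 443) for i in range(1000)]
usage = Counter(pick_next_hop(f, spines) for f in flows)
print(usage)  # roughly even split between the two spines
```

Adding a spine simply adds an entry to `next_hops`, which is why scaling out a Clos fabric also scales its usable bandwidth.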

Workload Mobility

Ever since VMware came into the picture, it has transformed the industry. Back in the '90s, workloads were almost all physical/bare-metal; today, data centers mostly run virtual workloads. A key benefit of the VMware virtualization platform is the ability to move virtual machines (VMs) between servers, and even between data centers, while the VM is running. This feature is called live (stateful) vMotion. To support it, the VM must stay in the same native IP subnet so that it remains reachable from users and from other components in the rest of the network.

The challenge is the IP subnet boundary: it limits the VM mobility domain to the cluster of VMware servers whose networks are on the same subnet. For example, if an administrator wants to move a VM to an underutilized server, they have to make sure that vMotion won't break the VM's network connections. With a small number of servers this isn't a problem, but as the number of VMs grows, the administrator runs into IP subnet roadblocks that limit vMotion, which is a scalability issue. Here comes the solution: VXLAN!

VXLAN is a standard that provides Layer 2 tunneling to overcome IP subnetting limitations. It lets administrators move VMs to any server within the Data Center, regardless of the IP subnets used by the underlying network. In layman's terms, VXLAN tunnels multiple Layer 2 segments across a Layer 3 IP infrastructure. It connects two or more Layer 3 network domains and makes them look like a single Layer 2 domain, so VMs sitting behind different routed subnets can communicate as if they were on the same Layer 2 network.

Cisco ACI uses VXLAN in its fabric with integrated underlay and overlay features. You can build a VXLAN fabric with non-ACI solutions as well, but what makes ACI special is that it automates the entire underlay configuration on all switches, sparing you from configuring VXLAN manually on each one. And it is not just VXLAN: you also need underlay routing, multicast to forward BUM traffic, a control plane to discover remote VTEPs, and so on. Cisco APIC configures all of these automatically, which truly saves your life. To dig deeper into VXLAN, refer to RFC 7348 for the VXLAN header format and encapsulation.
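To make the RFC 7348 reference concrete, here is a minimal Python sketch of the standard 8-byte VXLAN header: an 8-bit flags field with the "I" bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits, carried over UDP destination port 4789. The 24-bit VNI is what allows about 16 million segments, compared with 4094 usable VLAN IDs. ACI's internal fabric encapsulation extends this header with additional policy fields, so treat this as the standard format only; the function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned VXLAN destination port
VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid


def encode_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def decode_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    if len(header) < 8:
        raise ValueError("VXLAN header is 8 bytes")
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """UDP payload as sent by a VTEP: VXLAN header + the original Ethernet frame."""
    return encode_vxlan_header(vni) + inner_frame


print(encode_vxlan_header(5000).hex())  # 0800000000138800
```

The outer Ethernet, IP, and UDP headers are added by the sending VTEP (a leaf switch in ACI) and routed across the IS-IS underlay like any other IP packet.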

Centralized Visibility with Real-Time Health Monitoring

Cisco ACI makes monitoring your network much easier. It provides the following features:

- Health Scores – A score out of 100 that helps you identify which tenant, VRF, switch, or switchport has issues. Issues can be a link down, a power supply failure, a configuration error, etc.

- Unified Topology – Lets you track the connectivity of all your switches, their health, connected links, etc.

- Endpoint Tracker – We discussed workload mobility in the previous section. If you want to know which leaf switch a particular VM is connected to, simply search for the VM by its IP or MAC address in the Endpoint Tracker, and you will see where the VM is currently connected and which Endpoint Group (EPG) it belongs to.
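Conceptually, an endpoint tracker is an index keyed by MAC and IP that records where each endpoint was learned and when it moved. The Python sketch below models that idea in memory so a search by either key returns the current leaf, interface, and EPG plus the move history. It is a hypothetical illustration, not how APIC stores endpoint data; the leaf, interface, and EPG names are made up.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class Attachment:
    leaf: str
    interface: str
    epg: str
    seen_at: datetime


@dataclass
class Endpoint:
    mac: str
    ip: str
    history: List[Attachment] = field(default_factory=list)

    @property
    def current(self) -> Optional[Attachment]:
        return self.history[-1] if self.history else None


class EndpointTracker:
    """Hypothetical in-memory endpoint index searchable by IP or MAC."""

    def __init__(self) -> None:
        self._by_mac: Dict[str, Endpoint] = {}
        self._mac_by_ip: Dict[str, str] = {}

    def learn(self, mac: str, ip: str, leaf: str, interface: str, epg: str,
              when: Optional[datetime] = None) -> None:
        mac = mac.lower()
        ep = self._by_mac.get(mac)
        if ep is None:
            ep = Endpoint(mac, ip)
            self._by_mac[mac] = ep
        elif ep.ip != ip:
            self._mac_by_ip.pop(ep.ip, None)  # drop the stale IP mapping
            ep.ip = ip
        self._mac_by_ip[ip] = mac
        cur = ep.current
        # Record a new attachment only when the location or EPG actually changes.
        if cur is None or (cur.leaf, cur.interface, cur.epg) != (leaf, interface, epg):
            ep.history.append(Attachment(leaf, interface, epg, when or datetime.now(timezone.utc)))

    def search(self, ip_or_mac: str) -> Optional[Endpoint]:
        key = ip_or_mac.strip()
        mac = self._mac_by_ip.get(key, key.lower())
        return self._by_mac.get(mac)


tracker = EndpointTracker()
tracker.learn("00:50:56:AA:BB:CC", "10.1.1.10", "leaf-101", "eth1/5", "Web")
tracker.learn("00:50:56:aa:bb:cc", "10.1.1.10", "leaf-103", "eth1/7", "Web")  # after vMotion
print(tracker.search("10.1.1.10").current.leaf)  # leaf-103
```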

Scalable Performance and Multi-Tenancy

Scalability

Cisco ACI is built with scalability in mind. It uses a Clos (spine-leaf) architecture in which every spine is connected to every leaf. You can add more leafs and spines at any time without disrupting traffic.

Depending on the software release, Cisco ACI supports up to 200 leafs and 6 spines per pod, with an APIC cluster of up to 7 nodes. Refer to Cisco's ACI Verified Scalability Guide for the maximum limits supported to date.
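A quick worked example shows what those per-pod numbers mean physically. Assuming a single link between each leaf-spine pair (real designs may use more), a full mesh of 200 leafs and 6 spines needs 1,200 fabric links, every leaf gets 6 equal-cost uplinks, and every spine needs 200 leaf-facing ports. That last figure is why spine port density, not just software limits, bounds how many leafs a pod can hold. The function name is hypothetical.

```python
def clos_fabric_size(leafs: int, spines: int) -> dict:
    """Basic counts for a two-tier Clos pod, one link per leaf-spine pair (assumed)."""
    return {
        "fabric_links": leafs * spines,        # full mesh between the two tiers
        "uplinks_per_leaf": spines,            # each leaf connects to every spine
        "downlinks_per_spine": leafs,          # each spine connects to every leaf
        "ecmp_next_hops_per_leaf": spines,     # any spine reaches any other leaf in one hop
    }


print(clos_fabric_size(200, 6))
# {'fabric_links': 1200, 'uplinks_per_leaf': 6, 'downlinks_per_spine': 200, 'ecmp_next_hops_per_leaf': 6}
```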

Multi-Tenancy

Cisco ACI was designed from the beginning to be multi-tenant. Multi-tenancy means different things to different people (much like "cloud"). For a cloud service provider, a tenant is a unique customer; for a typical enterprise, a tenant could be an operational group, a business unit, an application vendor, and so on. In traditional networks, a routing protocol change on a Layer-3 device can potentially affect hundreds of unique subnets/domains, which makes any change on core devices complicated and risky. In the ACI policy model, a Tenant is a high-level object inside which you create VRFs, IP subnets, policies, etc., giving each tenant its own administrative and policy boundary.
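The tenant-as-container idea is visible directly in the APIC object model. The Python sketch below builds one nested JSON payload with a tenant (`fvTenant`), a VRF (`fvCtx`), a bridge domain (`fvBD`) bound to that VRF (`fvRsCtx`) with a gateway subnet (`fvSubnet`), and an application profile (`fvAp`) whose EPGs (`fvAEPg`) reference the bridge domain (`fvRsBd`). Those class names are from the APIC object model; the function and all object names are hypothetical, and you should verify the payload against your APIC version before POSTing it to `/api/mo/uni.json`.

```python
import json
from typing import List


def tenant_payload(tenant: str, vrf: str, bd: str, gateway_cidr: str,
                   app_profile: str, epgs: List[str]) -> dict:
    """Build one APIC REST payload for a tenant with a VRF, bridge domain, and EPGs."""
    epg_objects = [
        {"fvAEPg": {
            "attributes": {"name": epg},
            "children": [{"fvRsBd": {"attributes": {"tnFvBDName": bd}}}],
        }}
        for epg in epgs
    ]
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [
                {"fvCtx": {"attributes": {"name": vrf}}},
                {"fvBD": {
                    "attributes": {"name": bd},
                    "children": [
                        {"fvRsCtx": {"attributes": {"tnFvCtxName": vrf}}},
                        {"fvSubnet": {"attributes": {"ip": gateway_cidr}}},
                    ],
                }},
                {"fvAp": {"attributes": {"name": app_profile}, "children": epg_objects}},
            ],
        }
    }


payload = tenant_payload("Finance", "Finance-VRF", "App-BD", "10.10.10.1/24",
                         "Payroll", ["Web", "App", "DB"])
print(json.dumps(payload, indent=2))
```

Because everything for "Finance" hangs under one `fvTenant` object, changes to its VRF or subnets stay inside that subtree rather than touching other tenants' objects.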

Microsegmentation

Microsegmentation is the capability to isolate traffic within an Endpoint Group (EPG), or, put simply, within a VLAN (similar to Private VLANs), and to segment traffic based on workload attributes such as IP address, MAC address, and so on. For example, suppose several VMs share the same subnet or VLAN, and only two selected VMs should talk to each other while the rest should not. How can we meet this requirement in a traditional network? I can think of VLAN ACLs and Private VLANs. But is it really easy to configure and manage such VLAN ACLs or Private VLANs in a Data Center with around 50 leaf switches and VMs that can move? Not for me, at least. The chances of misconfiguration are very high.

That's where ACI solves the problem with microsegmentation. Once microsegmentation is enabled, ACI does not let workloads or physical nodes communicate with each other unless a contract explicitly permits it. The policy is applied on all relevant leafs, so you need not worry about workloads moving anywhere in the Data Center: microsegmentation is enforced across the whole fabric with the required policies.
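The two ideas in this section, classifying workloads into microsegment (uSeg) EPGs by attribute and allowing traffic only where a contract exists, can be modelled in a few lines. The Python sketch below reproduces the example above: three VMs in one subnet, with only vm-a allowed to reach vm-b. It is a conceptual model, not APIC's implementation; the rule, contract, and EPG names are hypothetical, and the isolation flag is global here for simplicity.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional, Set, Tuple


@dataclass(frozen=True)
class Workload:
    name: str
    ip: str
    mac: str


@dataclass(frozen=True)
class USegRule:
    """Hypothetical uSeg EPG rule: match a workload on IP prefix and/or MAC address."""
    epg: str
    ip_prefix: Optional[str] = None
    mac: Optional[str] = None

    def matches(self, w: Workload) -> bool:
        if self.ip_prefix is None and self.mac is None:
            return False  # a rule with no attributes matches nothing
        if self.mac is not None and w.mac.lower() != self.mac.lower():
            return False
        if self.ip_prefix is not None and (
            ipaddress.ip_address(w.ip) not in ipaddress.ip_network(self.ip_prefix)
        ):
            return False
        return True


def classify(w: Workload, rules: Iterable[USegRule], base_epg: str) -> str:
    """Place a workload in the first matching uSeg EPG, else keep its base EPG."""
    for rule in rules:
        if rule.matches(w):
            return rule.epg
    return base_epg


class ContractTable:
    """Allow-list model: nothing is permitted between EPGs without a contract."""

    def __init__(self, intra_epg_isolation: bool = False) -> None:
        self.intra_epg_isolation = intra_epg_isolation
        self._permits: Set[Tuple[str, str, int]] = set()

    def permit(self, consumer: str, provider: str, port: int) -> None:
        self._permits.add((consumer, provider, port))

    def allowed(self, src_epg: str, dst_epg: str, port: int) -> bool:
        if src_epg == dst_epg and not self.intra_epg_isolation:
            return True  # same-EPG traffic flows unless isolation is enforced
        return (src_epg, dst_epg, port) in self._permits


# Scenario from the text: several VMs in one subnet, only vm-a -> vm-b allowed.
rules = [USegRule("uSeg-A", ip_prefix="10.1.1.10/32"),
         USegRule("uSeg-B", ip_prefix="10.1.1.11/32")]
vms = [Workload("vm-a", "10.1.1.10", "00:50:56:00:00:0a"),
       Workload("vm-b", "10.1.1.11", "00:50:56:00:00:0b"),
       Workload("vm-c", "10.1.1.12", "00:50:56:00:00:0c")]
epg_of = {vm.name: classify(vm, rules, base_epg="Base") for vm in vms}

contracts = ContractTable(intra_epg_isolation=True)
contracts.permit("uSeg-A", "uSeg-B", 443)

print(contracts.allowed(epg_of["vm-a"], epg_of["vm-b"], 443))  # True
print(contracts.allowed(epg_of["vm-a"], epg_of["vm-c"], 443))  # False
```

Because classification depends on the workload's attributes rather than the port it is plugged into, the same answer holds after a vMotion to any other leaf.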

If you already have ACI deployed in your environment and want to know how to configure microsegmentation, we can help.

Third-Party Integration

L4-L7 Service Insertion

In certain use cases, you can integrate Cisco ACI with third-party components for unified policies and configuration. To understand them, let's first look at the challenge in traditional network environments.

Suppose we have a Data Center environment with network switches, servers, security firewalls, load balancers, and other components. A typical requirement: for app servers to communicate with DB servers, the traffic must pass through the firewalls. To achieve this in a traditional network, you can create separate VRFs, place the app servers and DB servers in different subnets/VLANs, build point-to-point links in both VRFs to the firewalls, and route traffic from each VRF to the firewall. The challenge is that the firewall admin has to configure the firewall separately and may end up using a different VLAN or IP than intended. Moreover, if the application must be load balanced by an external load balancer, the load balancer admin has to configure the network settings on the LBs separately. And if the firewall or load balancer runs as a VM on a hypervisor, the virtualization admin also has to configure the correct VLANs.

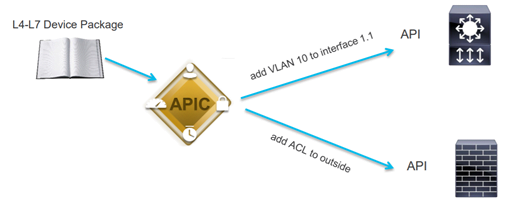

Cisco ACI solves this by integrating firewalls and load balancers with Cisco APIC through L4-L7 Service Insertion. Once the integration is in place, you can create policies and templates and provision contracts between workloads, which automatically provisions the required VLANs, ACLs, etc. on the third-party devices.

Have a look below to understand better.

The benefits of this feature are as follows:

1. Install once, deploy multiple times

2. Configuration templates that can be reused multiple times

3. Collecting health scores from third-party devices

4. Collecting statistics from third-party devices

5. Automatically updating ACLs and server pools on third-party devices through endpoint discovery
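Benefit 5 boils down to a reconciliation loop: compare the members a load balancer pool currently has with the endpoints the fabric has actually learned in the EPG, then add or remove the difference. The Python sketch below shows that diff step with vendor-neutral change records. The record format and function names are hypothetical; real service insertion pushes changes through the device package or integration for your specific firewall or load balancer.

```python
from typing import Dict, Iterable, List, Set, Tuple


def reconcile_pool(current_members: Iterable[str],
                   discovered_endpoints: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Return (to_add, to_remove) so the pool matches the endpoints learned in the EPG."""
    current = set(current_members)
    desired = set(discovered_endpoints)
    return desired - current, current - desired


def pool_change_set(pool: str, current_members: Iterable[str],
                    discovered_endpoints: Iterable[str], port: int) -> List[Dict[str, str]]:
    """Turn the diff into vendor-neutral change records (hypothetical format)."""
    to_add, to_remove = reconcile_pool(current_members, discovered_endpoints)
    changes = [{"op": "add", "pool": pool, "member": f"{ip}:{port}"} for ip in sorted(to_add)]
    changes += [{"op": "remove", "pool": pool, "member": f"{ip}:{port}"} for ip in sorted(to_remove)]
    return changes


# A new web VM (10.1.1.12) was learned and 10.1.1.10 was decommissioned.
print(pool_change_set("web-pool", ["10.1.1.10", "10.1.1.11"], ["10.1.1.11", "10.1.1.12"], 80))
```

Running the same function every time the endpoint table changes is what removes the manual "please add this server to the pool" ticket between teams.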

Virtual Machine Manager (VMM) Integration

Another very common third-party integration is between Cisco APIC and a Virtual Machine Manager. Picture a network admin and a VMware admin arguing because a VM is unreachable: a user is trying to ping the VM without success, and both admins insist their configuration is correct. I have seen this situation myself, and the cause was a very small misconfiguration. The network admin wanted the VM to be part of the default VLAN, which was VLAN 1 on the Cisco switch, and told the VMware admin to provision the VM with VLAN 1 (configured on a port group in the VMware world). The network admin assumed that the VM's traffic would arrive untagged on the trunk port and that the Cisco switch would automatically treat it as native VLAN traffic. Here comes the twist: if you set VLAN 1 on the port group on the VMware side, the hypervisor tags the traffic and sends it with VLAN tag 1. If you want the VM's traffic to be sent untagged, you need to use VLAN 0 in the port group.
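The difference between VLAN 0 and VLAN 1 on the port group is easy to see at the byte level. An 802.1Q tag is 4 bytes: the TPID 0x8100 followed by a TCI holding a 3-bit priority (PCP), a 1-bit DEI, and a 12-bit VLAN ID, inserted between the source MAC and the EtherType. The Python sketch below models what leaves the host for each setting. It is a simplified illustration with hypothetical function names; port group values outside 0-4094 (such as 4095 for guest tagging on a standard vSwitch) are deliberately left out.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def dot1q_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """4-byte 802.1Q tag: TPID followed by TCI (PCP 3 bits, DEI 1 bit, VID 12 bits)."""
    if not 1 <= vid <= 4094:
        raise ValueError("VLAN ID must be 1-4094 in this sketch")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def frame_from_port_group(dst_mac: bytes, src_mac: bytes, ethertype: int,
                          payload: bytes, port_group_vlan: int) -> bytes:
    """Model the frame sent on the host uplink for a given port group VLAN setting."""
    header = dst_mac + src_mac
    if port_group_vlan == 0:
        # VLAN 0 on the port group: the vSwitch does not tag, so the switch
        # receives an untagged frame and maps it to the trunk's native VLAN.
        return header + struct.pack("!H", ethertype) + payload
    # Any VLAN ID from 1 to 4094, including 1, is sent as an explicit tag.
    return header + dot1q_tag(port_group_vlan) + struct.pack("!H", ethertype) + payload


dst = bytes.fromhex("ffffffffffff")
src = bytes.fromhex("005056aabbcc")
untagged = frame_from_port_group(dst, src, 0x0800, b"payload", port_group_vlan=0)
tagged = frame_from_port_group(dst, src, 0x0800, b"payload", port_group_vlan=1)
print(tagged[12:16].hex())  # 81000001 -> TPID 0x8100, VID 1
```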

As you can see, such a small misunderstanding can cause a real outage. At that point, we realized there should be a bridge between the physical network switching world and the virtualized world. That's where Cisco ACI helps: you can integrate Cisco APIC with supported virtualization managers such as VMware vCenter (in VMware vSphere environments) and Microsoft SCVMM (in Microsoft Hyper-V environments).

Take VMware vCenter as an example. Cisco APIC connects to vCenter and automatically creates an APIC-controlled Distributed Virtual Switch (DVS) at the vSphere layer. In Cisco APIC, you define the VLAN pool and associate your EPGs with this VMM domain, and a port group for each EPG, with its VLAN, is created automatically on the DVS. The VMware admin just has to map the VMs to the correct port group.
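The key point is that the VLAN number is chosen by APIC from a pool, not typed by two different people. The Python sketch below models a dynamic VLAN pool that hands each EPG a VLAN once and always returns the same value afterwards, together with a port group name modelled on the tenant|application-profile|EPG pattern APIC uses by default (treat the exact naming format as an assumption). The class, function, and object names are hypothetical.

```python
from typing import Dict, List, Tuple


class DynamicVlanPool:
    """Hypothetical model of a dynamic VLAN pool bound to a VMM domain."""

    def __init__(self, first: int, last: int) -> None:
        if not 1 <= first <= last <= 4094:
            raise ValueError("VLAN range must be within 1-4094")
        self._free: List[int] = list(range(first, last + 1))
        self._assigned: Dict[Tuple[str, str, str], int] = {}

    def allocate(self, tenant: str, app: str, epg: str) -> int:
        key = (tenant, app, epg)
        if key in self._assigned:  # idempotent: an EPG keeps its VLAN
            return self._assigned[key]
        if not self._free:
            raise RuntimeError("VLAN pool exhausted")
        vlan = self._free.pop(0)
        self._assigned[key] = vlan
        return vlan


def port_group_name(tenant: str, app: str, epg: str) -> str:
    # Modelled on APIC's default tenant|application-profile|EPG naming (assumption).
    return f"{tenant}|{app}|{epg}"


pool = DynamicVlanPool(1000, 1099)
for epg in ["Web", "App", "DB"]:
    print(port_group_name("Prod", "Shop", epg), pool.allocate("Prod", "Shop", epg))
# Prod|Shop|Web 1000
# Prod|Shop|App 1001
# Prod|Shop|DB 1002
```

Since the same VLAN value is programmed on both the leaf and the DVS port group, the VLAN 0 versus VLAN 1 mix-up described above cannot happen between the two teams.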

Image Management

In Cisco ACI, you don't have to manually upload the OS image to every switch for an upgrade. You simply upload the image to Cisco APIC and upgrade the ACI OS on the switches from there. In Data Centers, most devices such as servers, security appliances, and routers are dual-homed to two leaf switches, so the ideal approach is to upgrade the switches in an odd/even fashion: upgrade the ACI OS on the odd leafs first, and once they are done, upgrade the even leafs. You can upgrade the spine switches the same way. This helps you avoid traffic disruption.
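The odd/even approach works because dual-homed devices connect to a leaf pair, so as long as the two members of each pair land in different upgrade waves, one path stays up. The Python sketch below splits node IDs into odd and even waves and checks that property. It assumes vPC pair members are numbered consecutively (for example 101/102), which is a common convention but not a rule; the node IDs and helper names are hypothetical, and in APIC you would reproduce the two waves as firmware upgrade groups.

```python
from typing import Dict, Iterable, List, Tuple


def upgrade_groups(node_ids: Iterable[int]) -> Dict[str, List[int]]:
    """Split switch node IDs into odd and even upgrade waves."""
    groups: Dict[str, List[int]] = {"odd": [], "even": []}
    for node in sorted(node_ids):
        groups["odd" if node % 2 else "even"].append(node)
    return groups


def pairs_are_split(groups: Dict[str, List[int]],
                    vpc_pairs: Iterable[Tuple[int, int]]) -> bool:
    """True if no vPC pair has both members in the same wave (so one side stays up)."""
    for a, b in vpc_pairs:
        for members in groups.values():
            if a in members and b in members:
                return False
    return True


leafs = range(101, 109)  # hypothetical leaf node IDs 101-108
vpc_pairs = [(101, 102), (103, 104), (105, 106), (107, 108)]
waves = upgrade_groups(leafs)
print(waves)  # {'odd': [101, 103, 105, 107], 'even': [102, 104, 106, 108]}
print(pairs_are_split(waves, vpc_pairs))  # True
```

If your pairs are numbered differently, `pairs_are_split` flags the problem before you schedule the upgrade.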

ACI Inventory and Configuration

Another useful feature is that you can replace a switch and bring it into service without worrying about its configuration, because all the configuration lives on Cisco APIC. Suppose one of your leafs fails and the OEM replaces it. In a traditional network, when the brand-new device arrives, you have to connect to its console and configure it manually, and if you missed taking a backup of the old switch, you are in trouble, my friend. Cisco ACI makes your life easier: APIC holds the centralized configuration for all switches, derived from the policies you have configured, and pushes it to the replacement once it is registered in the fabric.

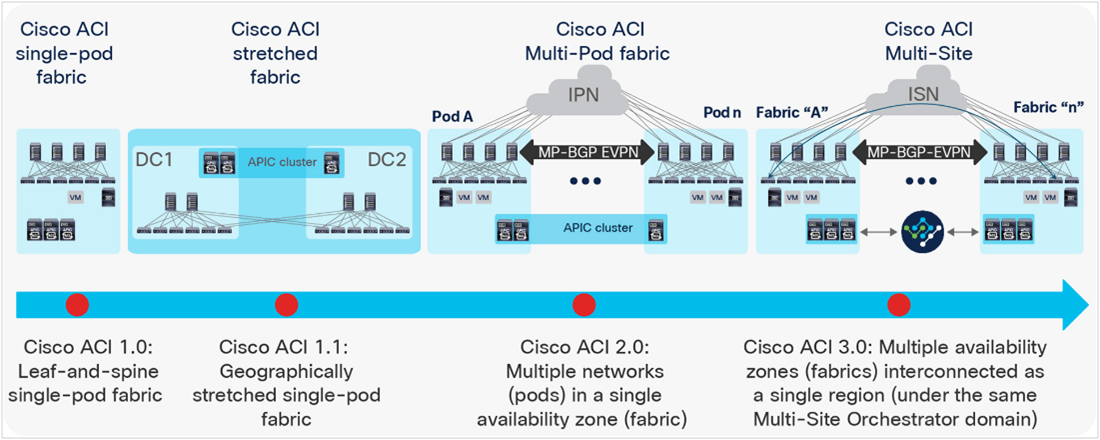

Deployment Modes – Unified Control for Multiple Pods/Fabrics/Sites/Clouds

Cisco ACI gives you several options to stretch your fabric. If you have multiple spine-leaf fabrics deployed close together, perhaps in the same building or campus, and you want to stretch your VLANs, IP subnets, and VRFs between them, you can use the Multi-Pod architecture. But if you have two different sites, say a DC and a DR site in two different locations with IP reachability over MPLS or other WAN links, you can choose a Multi-Site deployment. It keeps common configuration across both sites and supports Layer 2 stretch between them using VXLAN. This Layer 2 extension can be created with or without flooding of multi-destination traffic.

Multi-Site requires an additional component, the Multi-Site Orchestrator, a cluster of virtual machines that serves as a central point for monitoring and configuring multiple site fabrics at once. A Multi-Site ACI deployment also supports disaster recovery scenarios where IP mobility across sites is required: you can configure the same IP subnet in multiple sites without Layer-2 flooding between them.

If you are unsure whether to choose Multi-Pod or Multi-Site, the answer depends on your use cases and the connectivity between the locations. For Multi-Pod, the round-trip latency between pods must not exceed 50 ms; typical use cases are within the same building or campus. If you have two different locations and the latency may approach or exceed 50 ms, Multi-Site is the better choice. If data protection between the two locations is a requirement, consider Multi-Site, which can optionally encrypt inter-site traffic using Cisco's CloudSec technology.
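The rule of thumb above can be written down as a small decision helper. The Python sketch below encodes only the two criteria from this section: the 50 ms round-trip limit for Multi-Pod and the need for encryption between locations. The 80% `headroom` factor, used to treat latency "near" the limit as too close, is an arbitrary assumption of this sketch, and the function name is hypothetical; real designs also weigh failure-domain isolation, WAN capabilities, and licensing.

```python
MULTIPOD_MAX_RTT_MS = 50.0  # maximum inter-pod round-trip latency cited in the text


def recommend_deployment(rtt_ms: float, needs_encryption: bool,
                         headroom: float = 0.8) -> str:
    """Rough Multi-Pod vs Multi-Site hint from latency and encryption needs.

    `headroom` is an assumed safety margin: latencies above 80% of the
    50 ms limit (by default) are treated as too close for Multi-Pod.
    """
    if rtt_ms < 0:
        raise ValueError("latency cannot be negative")
    if needs_encryption:
        return "Multi-Site"  # CloudSec inter-site encryption is a Multi-Site option
    if rtt_ms <= MULTIPOD_MAX_RTT_MS * headroom:
        return "Multi-Pod"
    return "Multi-Site"


for rtt, enc in [(2.0, False), (45.0, False), (5.0, True)]:
    print(rtt, enc, recommend_deployment(rtt, enc))
```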

Conclusion

Cisco ACI has been a transformational solution that has brought Data Centers across the globe into the world of Software-Defined Networking. It addresses scalability, VM mobility, flooding, Spanning Tree, and many other problems, while also helping to reduce OPEX and simplify operations.

Want to go with ACI?

If you are planning a tech refresh, a migration from your legacy network to ACI, or the implementation of advanced features in your existing ACI fabric, you can always reach out to us. The team at Zindagi Technologies consists of experts in Campus/Data Centre technologies, Service Provider Networks, Collaboration, Wireless, Surveillance, private cloud, public cloud, OpenStack, ACI, storage, and security technologies, with over 20 years of combined industry experience in planning, designing, implementing, and optimizing complex Data Centre and cloud deployments.

We will be glad to help you.

For any inquiries, please reach us by email at [email protected] or visit https://zindagitech.com/. Let’s hear from you at +919773973971.

Author

Harpreet Singh Batra

Consulting Engineer- Data Center and Network Security