Types, Methods & Algorithms to Load Balance Traffic

Over the years, load balancers have become an essential part of enterprise and cloud architectures. They provide an optimized way to distribute traffic loads between servers and applications.

In the previous post, we introduced load balancers and explained why they can be an integral part of the infrastructure solutions delivered to customers.

In this blog, we discuss the available types, methods, and algorithms of load balancing, and how to judge which of them are suitable and feasible for a given set of solution requirements.

General Problems Of A Customer

Consider a web application that an enterprise currently hosts on a single application server. As the user base grows, network usage increases, and the customer ends up buying more high-speed servers to host the same application so that every user is served without overloading any server. Even then, it is not clear which server is handling the most traffic or whether the load is spread efficiently.

In such cases, the network administrator has to make sure that traffic is load balanced in a way that meets many different application delivery needs. It is therefore important to understand those needs before choosing among the types, methods, and algorithms of load balancing.

Types of Load Balancers

A Network Load Balancer is a Layer 4 (TCP/UDP) load balancer that distributes traffic based on IP addresses and port numbers. It forwards traffic to an appropriate, available server using load balancing algorithms that work on the source/destination ports, source/destination IPs, protocol, and sequence numbers in the packet headers. Received traffic is routed to the target server without inspecting its content.

An Application Load Balancer, on the other hand, works at Layer 7 and distributes requests based on multiple variables such as content type, cookie data, and custom headers for the different applications hosted on the target servers. It examines the traffic and determines the availability and behavior of the application, which helps in choosing an applicable load balancing algorithm.
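To make the difference concrete, here is a minimal sketch of the two decision styles. It assumes a hash of the connection 5-tuple for the Layer 4 case, which is only one of several possible choices, and a small rule table for the Layer 7 case. All server names, the `X-Canary` header, and the path prefixes are hypothetical.

```python
import hashlib

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical backend names


def pick_l4(src_ip, src_port, dst_ip, dst_port, protocol):
    """Layer 4 style: decide from the connection 5-tuple only.

    Hashing the 5-tuple is an assumption made for this sketch; the
    request content is never looked at.
    """
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}-{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    return SERVERS[int.from_bytes(digest[:4], "big") % len(SERVERS)]


# Hypothetical Layer 7 rules: URL path prefix -> server pool.
L7_RULES = {"/api/": ["api-1", "api-2"], "/static/": ["cdn-1"]}
DEFAULT_POOL = ["web-1", "web-2"]


def pick_l7_pool(path, headers):
    """Layer 7 style: inspect the request itself before choosing a pool."""
    if headers.get("X-Canary") == "true":  # illustrative custom header
        return ["canary-1"]
    for prefix, pool in L7_RULES.items():
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

The Layer 4 function always maps the same connection to the same server, while the Layer 7 function can send `/api/` and `/static/` requests from the same client to different pools.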

Methods of Load Balancing

Static load balancing algorithms generally make their decisions based on the availability of the target servers, independent of the servers' actual current performance state. Traffic is mostly divided equally among them.

Dynamic load balancing regularly checks the performance and availability of each server and adjusts its load balancing preferences dynamically, which changes how traffic is distributed across the system.

Static Load Balancing Algorithms

There are two types of static load balancing algorithms: Round Robin and Ratio/Weighted Round Robin.

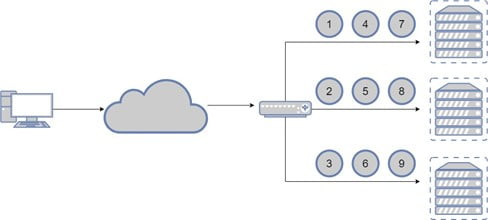

Round Robin

- Client requests are evenly distributed across multiple servers.

- Easy to configure and implement.

- Traffic is passed to the next available server in succession.

- Once every server has received a connection, the next connection loops back to the first server.

- Used when the servers are equal in capacity in terms of CPU, Memory, etc.
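The rotation described above can be sketched in a few lines. This is a minimal illustration with hypothetical server names, not the implementation of any particular product.

```python
from itertools import cycle

# Hypothetical pool of equally sized servers.
servers = ["server-1", "server-2", "server-3"]
rotation = cycle(servers)  # endless iterator that loops back to the start


def next_server():
    """Return the next server in the fixed rotation."""
    return next(rotation)


# Seven incoming connections: the fourth and seventh wrap around to server-1.
assignments = [next_server() for _ in range(7)]
print(assignments)
```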

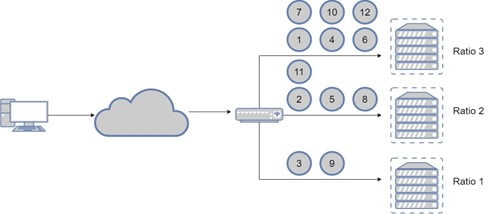

Ratio/Weighted Round Robin

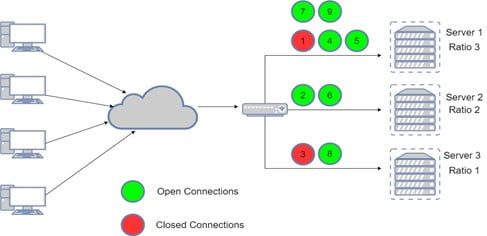

- Distributes connections among servers in a static rotation according to ratio weights statically defined for each server.

- Distribution is based on user-specified ratio weights proportional to the traffic handling capacity of the servers.

- Used when the servers are disproportionate with respect to processing speed and memory capacity.
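A simple way to picture the weighted rotation is to repeat each server in the rotation as many times as its weight. The sketch below does exactly that; the weights and server names are hypothetical, and real load balancers may interleave the servers more smoothly.

```python
from collections import Counter
from itertools import cycle

# Hypothetical, administrator-defined ratio weights.
weights = {"server-1": 3, "server-2": 2, "server-3": 1}


def build_rotation(weights):
    """Expand the weights into a static rotation list."""
    rotation = []
    for server, weight in weights.items():
        rotation.extend([server] * weight)
    return rotation


rotation = cycle(build_rotation(weights))

# Twelve connections are two full passes over the six-slot rotation.
assignments = [next(rotation) for _ in range(12)]
counts = Counter(assignments)
print(counts)
```

With these weights, server-1 ends up with three times as many connections as server-3, matching the 3:2:1 ratio.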

Dynamic Load Balancing Algorithms

The dynamic load balancing algorithms are Least Connections, Weighted Least Connections, Fastest Response Time, Observed, and Predictive.

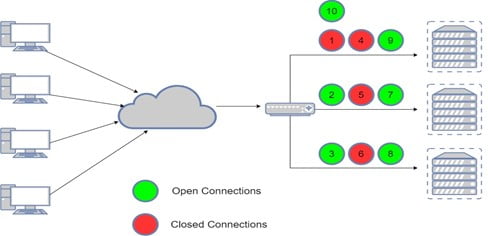

Least Connections

- Distribution is done based on the connections that every server is currently maintaining.

- Incoming requests are routed to the server with the least number of concurrent sessions.

- The server with the least number of active connections automatically receives the next request.

- This ensures that no server sits idle while one or two servers receive all the traffic load.

- This is used in scenarios where the servers have similar capabilities, but client sessions on one server last longer than sessions on another server.
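The selection rule above can be sketched as follows. The connection counts and server names are hypothetical, and ties are broken by pool order, which is an assumption of this sketch.

```python
# Hypothetical snapshot of active connections per server.
active = {"server-1": 4, "server-2": 2, "server-3": 5}


def pick_least_connections(active):
    """Return the server currently holding the fewest active connections."""
    return min(active, key=active.get)


def open_connection(active):
    """Route a new connection and update the bookkeeping."""
    server = pick_least_connections(active)
    active[server] += 1
    return server


def close_connection(active, server):
    """Release a connection when the client session ends."""
    active[server] -= 1


first = open_connection(active)   # server-2 has the fewest connections
second = open_connection(active)  # still server-2 (3 connections vs 4 and 5)
print(first, second, active)
```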

Weighted Least Connections

- Like Least Connections, traffic is distributed based on the number of active connections, but this method is meant for cases where the servers' capacities are not equal.

- This ensures that a server with higher capacity receives a higher number of connections.
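One common way to combine the two ideas is to compare active connections divided by weight. That formula is an assumption of this sketch, as are the weights and server names.

```python
# Hypothetical pool: a higher weight means a higher-capacity server.
servers = {
    "server-1": {"weight": 4, "active": 6},
    "server-2": {"weight": 2, "active": 4},
    "server-3": {"weight": 1, "active": 1},
}


def load_score(server):
    """Active connections per unit of capacity (lower is better)."""
    return server["active"] / server["weight"]


def pick_weighted_least_connections(servers):
    """Return the server with the lowest connections-to-weight score."""
    return min(servers, key=lambda name: load_score(servers[name]))


def open_connection(servers):
    name = pick_weighted_least_connections(servers)
    servers[name]["active"] += 1
    return name


# Scores start at 1.5, 2.0 and 1.0, so server-3 is picked first.
first = open_connection(servers)
# server-3 now scores 2.0, so server-1 (1.5) is picked next,
# even though it already holds the most connections.
second = open_connection(servers)
print(first, second)
```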

Fastest Response Time

- This load balancing algorithm works on the number of outstanding requests, that is, requests sent to a server that the server has yet to answer.

- When client SYNs are load balanced across different servers, the load balancer records each server's response time and forwards subsequent requests to the servers that responded the fastest.

- Note that the fastest-responding server does not necessarily receive all client requests. The load balancer also keeps a record of the active connections each server is currently handling and distributes traffic only among the servers with a smaller number of connections.
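The interplay of response time and connection count can be sketched as below. The moving average, the `max_active` cap, and all names are assumptions chosen for illustration; actual products measure and combine these values in their own ways.

```python
ALPHA = 0.3  # smoothing factor for the moving average (assumed value)


class ServerStats:
    """Per-server bookkeeping kept by the hypothetical load balancer."""

    def __init__(self):
        self.avg_ms = None  # smoothed response time, None until measured
        self.active = 0     # connections currently being handled


def record_response(stats, elapsed_ms):
    """Fold a new response-time sample into the moving average."""
    if stats.avg_ms is None:
        stats.avg_ms = elapsed_ms
    else:
        stats.avg_ms = ALPHA * elapsed_ms + (1 - ALPHA) * stats.avg_ms


def pick_fastest(pool, max_active):
    """Pick the fastest server among those below the connection cap."""
    candidates = {n: s for n, s in pool.items() if s.active < max_active}
    if not candidates:  # every server is at the cap: fall back to all
        candidates = pool
    # Unmeasured servers count as 0 ms so that they get probed first.
    return min(
        candidates,
        key=lambda n: 0.0 if candidates[n].avg_ms is None else candidates[n].avg_ms,
    )


pool = {"server-1": ServerStats(), "server-2": ServerStats(), "server-3": ServerStats()}
record_response(pool["server-1"], 20)
record_response(pool["server-2"], 50)
record_response(pool["server-3"], 10)
pool["server-3"].active = 5  # fastest server, but already busy

choice = pick_fastest(pool, max_active=5)
print(choice)
```

Here server-3 answers fastest, yet the request goes to server-1 because server-3 has reached the assumed connection cap.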

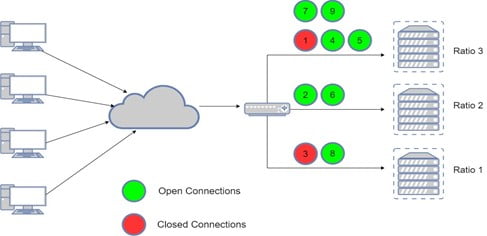

Observed

- The Observed load balancing method is ratio-based, but the ratio is set dynamically by the load balancer itself rather than by an administrator.

- The ratio assigned to each member is based on the current connection count.

- If the connection count is unchanged, the system does not change the ratio value.

- Requests will be distributed to the servers with a lower connection count.

- Servers with lower connection counts get a higher ratio, and vice versa, so that traffic is distributed more evenly among all servers.

- The ratio is defined based on the last connection counts within a period of time.
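As a rough sketch, the ratio can be derived by ranking the servers by connection count, with the busiest server getting ratio 1. This rank-based mapping is an assumption chosen because it reproduces the ratios in the table later in this post; the sample counts are the ones listed there.

```python
def observed_ratios(connections):
    """Assign ratio 1 to the busiest server, 2 to the next, and so on.

    Rank-based assignment is an assumption of this sketch; a real load
    balancer may derive the ratio differently.
    """
    busiest_first = sorted(connections, key=connections.get, reverse=True)
    return {server: rank for rank, server in enumerate(busiest_first, start=1)}


# Connection counts observed in two consecutive intervals.
interval_1 = {"Server 1": 5, "Server 2": 7, "Server 3": 2}
interval_2 = {"Server 1": 4, "Server 2": 3, "Server 3": 7}

ratios_1 = observed_ratios(interval_1)
ratios_2 = observed_ratios(interval_2)
print(ratios_1)
print(ratios_2)
```

In the first interval Server 3 has the fewest connections and gets the highest ratio; in the second interval that role passes to Server 2.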

Predictive

- The Predictive dynamic load balancing algorithm is similar to Observed mode: it assigns a dynamic ratio value based on the number of L4 connections to each pool member over time.

- With the Predictive method, the load balancer analyzes the ranking over time to determine whether a node's performance is currently improving or declining.

- The servers with performance rankings that are currently improving, rather than declining, receive a higher proportion of the connections.

| Frequency Seq. No. | Server Name | No. of Connections | Ratio |
| --- | --- | --- | --- |
| 1 | Server 1 | 5 | 2 |
| 1 | Server 2 | 7 | 1 |
| 1 | Server 3 | 2 | 3 |
| 2 | Server 1 | 4 | 2 |
| 2 | Server 2 | 3 | 3 |
| 2 | Server 3 | 7 | 1 |
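Using the two intervals from the table above, the Predictive idea can be sketched by comparing each server's ranking across intervals. The one-step bonus and penalty are assumptions made purely for illustration, as is the rank-based ratio helper.

```python
def observed_ratios(connections):
    """Rank-based ratio: busiest server gets 1 (assumption of this sketch)."""
    busiest_first = sorted(connections, key=connections.get, reverse=True)
    return {server: rank for rank, server in enumerate(busiest_first, start=1)}


def predictive_ratios(previous, current):
    """Adjust the current ratio by the trend since the previous interval.

    A server whose ranking improved gets a bonus of 1; a server whose
    ranking declined loses 1, but never drops below 1. Both step sizes
    are hypothetical.
    """
    before = observed_ratios(previous)
    now = observed_ratios(current)
    ratios = {}
    for server, ratio in now.items():
        if ratio > before[server]:    # ranking is improving
            ratio += 1
        elif ratio < before[server]:  # ranking is declining
            ratio = max(1, ratio - 1)
        ratios[server] = ratio
    return ratios


interval_1 = {"Server 1": 5, "Server 2": 7, "Server 3": 2}
interval_2 = {"Server 1": 4, "Server 2": 3, "Server 3": 7}

ratios = predictive_ratios(interval_1, interval_2)
print(ratios)
```

Server 2 moved from ratio 1 to ratio 3 between the intervals, so it is treated as improving and receives a larger share than Observed mode alone would give it.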

Conclusion

In this blog, we have seen how load balancers can be used to tune and manage the traffic load from hundreds and thousands of users without the extra cost of buying more servers as the network expands. We at Zindagi Technologies, with years of experience, understand the needs and requirements of our clients and deliver the best possible solution when it comes to reducing cost and getting the most out of a deployment, so that the network infrastructure serves as much as is expected of it. We look forward to hearing from you. For any further suggestions or support, please feel free to reach out to us at Zindagi Technologies or drop us a mail. You can also call us at +919773973971 to discuss your plans for the business.

Author

Rajat Goyal

Project Lead