What Is QoS and Why Is It Important in Networking?

In this article, we are going to discuss QoS and its significance, and how QoS works. QoS stands for Quality of Service: a set of techniques used to prioritize traffic and to reduce packet loss, latency, and jitter in our network environment.

Before looking at how a user's traffic gets prioritized when different types of packets, such as data, voice, and video, pass over a single link, we first need to understand the challenges we faced without QoS.

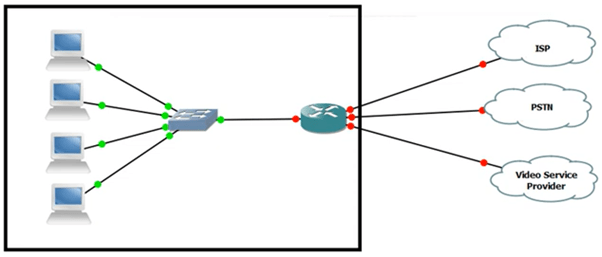

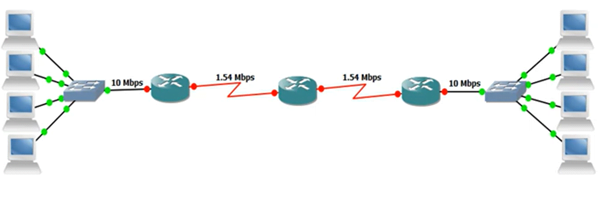

Traditional Network

In the above diagram, we can see that the user requires multiple connections for multiple services, i.e. an ISP link for Internet communication, a PSTN link for voice traffic, and a video SP link for video traffic. Maintaining these separate links adds configuration overhead.

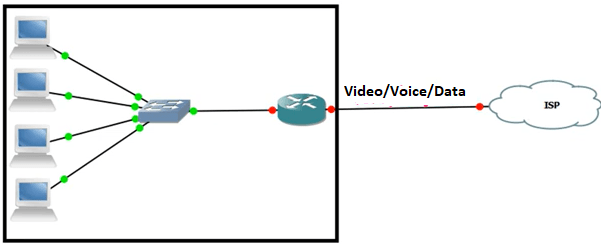

Converged Network

In this converged network, the user needs only a single link to the ISP to carry multiple types of traffic, i.e. data, voice, and video. However, a converged network has some issues, which we will discuss below.

Problems in a Converged Network

The following are the issues in a converged network:

- Small voice traffic has to compete with other traffic in a converged network

- Critical traffic must get priority

- Voice & Video traffic is delay sensitive/real-time

- Lack of Bandwidth

- Packet Loss

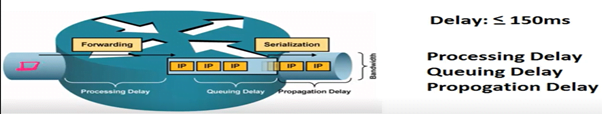

Small Voice Traffic Competes in a Converged Network

The bandwidth of a typical voice call varies from about 17 Kbps to 106 Kbps, depending on the codec, which is comparatively small. In a converged network, this small voice traffic has to compete with much larger data and video flows for the same link.

The generally accepted target for voice traffic is a one-way (end-to-end) delay of no more than 150 ms. This budget covers every delay along the path, including the time routers and switches take to process, queue, and rewrite the traffic. Jitter (the variation in delay between consecutive packets) should be no more than 30 ms, and packet loss should not exceed 1%.
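To make these three voice targets concrete, here is a minimal Python sketch that checks a set of per-packet one-way delay samples against them. The function names and sample values are hypothetical, and jitter is computed simply as the mean absolute difference between consecutive delays; RTP (RFC 3550) uses a smoothed estimator instead.

```python
# Voice targets from the text: one-way delay <= 150 ms,
# jitter <= 30 ms, packet loss <= 1%.
MAX_DELAY_MS = 150.0
MAX_JITTER_MS = 30.0
MAX_LOSS_PCT = 1.0


def jitter_ms(delays_ms):
    """Simplified jitter: mean absolute change between consecutive delays."""
    if len(delays_ms) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return sum(diffs) / len(diffs)


def loss_pct(sent, received):
    """Percentage of sent packets that never arrived."""
    return 100.0 * (sent - received) / sent


def voice_ok(delays_ms, sent, received):
    """True only if the worst delay, the jitter and the loss all meet the targets."""
    return (
        max(delays_ms) <= MAX_DELAY_MS
        and jitter_ms(delays_ms) <= MAX_JITTER_MS
        and loss_pct(sent, received) <= MAX_LOSS_PCT
    )


# Hypothetical measurements: one-way delays (ms) of five received packets.
samples = [100, 110, 105, 120, 115]
print(jitter_ms(samples))                          # 8.75
print(voice_ok(samples, sent=1000, received=995))  # True (0.5% loss)
```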

Critical Traffic Must Get Priority

Critical traffic is traffic that should not be lost or dropped due to low bandwidth or queuing delay. Take a call center as an example: telephone conversations (voice traffic) and the server database (application traffic) are its critical traffic. Hence, this traffic must get priority over all other traffic so that the business is not impacted.

Voice & Video Traffic Is Delay-Sensitive / Real-Time

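Voice and video traffic is real-time, delay-sensitive traffic that uses the UDP protocol for forwarding. UDP does not retransmit lost packets, and a retransmitted packet would arrive too late to be played out anyway, so there should not be any packet loss; otherwise, the call or video may be interrupted.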

Lack of Bandwidth

In a converged network, when multiple types of traffic (voice/video/data) pass over a single low-bandwidth link, congestion can occur. One option is to purchase a higher-bandwidth link, but this can be very costly.

Packet Loss

When multiple types of traffic pass over a single low-bandwidth link, some packets may be dropped when the queue on the exit interface fills up. As a result, users' communication may be affected.

To address these challenges in a converged network, we use QoS to prioritize critical and delay-sensitive traffic and keep traffic flowing smoothly even on a low-bandwidth link.

Working Models of QoS

QoS works on the following three models:

- Best effort model

- IntServ model

- DiffServ model

Best-Effort Model

In this model, no QoS is applied; it is the default behavior. Packets are forwarded in FIFO (First In, First Out) order, so every packet is treated the same regardless of its type.
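A minimal sketch of best-effort forwarding, assuming a single egress queue (the class name and packet labels are hypothetical): every packet joins the same queue and leaves in arrival order, so a voice packet simply waits behind whatever data arrived before it.

```python
from collections import deque


class FifoQueue:
    """Best-effort egress queue: one queue, no priorities."""

    def __init__(self):
        self._packets = deque()

    def enqueue(self, packet):
        self._packets.append(packet)  # every packet joins the back of the queue

    def dequeue(self):
        # The oldest packet leaves first; None means the queue is empty.
        return self._packets.popleft() if self._packets else None


q = FifoQueue()
for pkt in ["data-1", "voice-1", "video-1", "data-2"]:
    q.enqueue(pkt)
print([q.dequeue() for _ in range(4)])  # ['data-1', 'voice-1', 'video-1', 'data-2']
```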

IntServ Model

The IntServ (Integrated Services) model works per flow and uses RSVP (Resource Reservation Protocol) to reserve resources for each flow along the path. A flow is the set of IP packets that share the same source IP address, destination IP address, protocol, and source and destination port numbers. This model is used by service providers.
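The per-flow idea can be sketched in Python as a lookup keyed on the five fields above. This only illustrates per-flow admission on a single link under assumed numbers: it does not implement RSVP signaling, and the class names, addresses and ports are hypothetical.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    """The five fields that identify a flow in the IntServ model."""
    src_ip: str
    dst_ip: str
    protocol: str
    src_port: int
    dst_port: int


class ReservationTable:
    """Per-flow bandwidth reservations on one link (hypothetical, not RSVP)."""

    def __init__(self, link_kbps):
        self.free_kbps = link_kbps
        self.reservations = {}  # FlowKey -> reserved kbps

    def reserve(self, flow, kbps):
        # Bandwidth already held by this flow is returned before re-checking.
        available = self.free_kbps + self.reservations.get(flow, 0)
        if kbps > available:
            return False  # admission denied: not enough bandwidth left
        self.reservations[flow] = kbps
        self.free_kbps = available - kbps
        return True


table = ReservationTable(link_kbps=1544)
call = FlowKey("10.1.1.10", "10.2.2.20", "udp", 16384, 16386)
print(table.reserve(call, 100))  # True
print(table.free_kbps)           # 1444
```

Because every device along the path has to keep a table like this for every flow, the amount of state grows with the number of flows.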

DiffServ Model

The DiffServ (Differentiated Services) model is the QoS model we use in our converged network to prioritize traffic. It does not work per flow. Instead, traffic is grouped into classes, and we provide dedicated bandwidth to each specific type of traffic.

For example, suppose we have a 1.54 Mbps link over which multiple types of traffic (voice/video/data) must pass. We can dedicate bandwidth to each type of traffic, i.e. voice ~0.70 Mbps, video ~0.50 Mbps, and data ~0.30 Mbps.
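A quick Python check of this kind of per-class allocation, using a 1.54 Mbps link with voice at 0.70 Mbps, video at 0.50 Mbps and data at 0.30 Mbps. The function name is hypothetical; it simply confirms that the classes together do not oversubscribe the link.

```python
def remaining_bandwidth(link_mbps, allocations):
    """Return the unallocated bandwidth, or raise if the classes oversubscribe the link."""
    total = sum(allocations.values())
    if total > link_mbps:
        raise ValueError(f"classes need {total:.2f} Mbps, link has {link_mbps} Mbps")
    return link_mbps - total


classes = {"voice": 0.70, "video": 0.50, "data": 0.30}
print(f"{remaining_bandwidth(1.54, classes):.2f} Mbps unallocated")  # 0.04 Mbps unallocated

try:
    remaining_bandwidth(1.54, {"voice": 0.70, "video": 0.50, "data": 1.0})
except ValueError as err:
    print(err)  # classes need 2.20 Mbps, link has 1.54 Mbps
```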

The DiffServ model uses the following QoS mechanisms:

- Classification

- Marking

- Congestion Management

- Congestion Avoidance

- Policing

- Shaping

Classification

Classification is the process of identifying and categorizing traffic. We can classify traffic on the basis of IP addresses and services (protocols and port numbers). We use ACLs and class-maps to classify traffic.

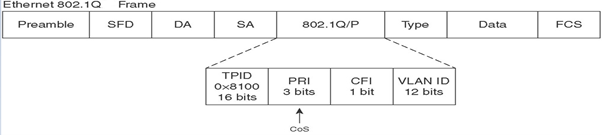

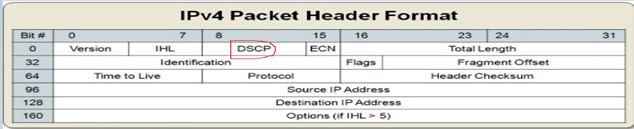
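Classification can be pictured as an ordered list of match rules, evaluated top-down the way ACL entries are, with unmatched traffic falling into a default class (Cisco's MQC calls it `class-default`). A minimal Python sketch; the class names, the UDP port range used for voice and the server subnet are assumptions chosen for illustration.

```python
from ipaddress import ip_address, ip_network

APP_SERVERS = ip_network("10.20.0.0/16")  # assumed subnet of critical servers

# Hypothetical rules: (class name, match function). The first match wins.
CLASS_RULES = [
    ("voice", lambda p: p["protocol"] == "udp" and 16384 <= p["dst_port"] <= 32767),
    ("app-servers", lambda p: ip_address(p["dst_ip"]) in APP_SERVERS),
    ("https", lambda p: p["protocol"] == "tcp" and p["dst_port"] == 443),
    ("smtp", lambda p: p["protocol"] == "tcp" and p["dst_port"] == 25),
    ("ftp", lambda p: p["protocol"] == "tcp" and p["dst_port"] in (20, 21)),
]


def classify(packet):
    """Return the name of the first class whose rule matches the packet."""
    for name, matches in CLASS_RULES:
        if matches(packet):
            return name
    return "class-default"  # traffic that matched no rule


print(classify({"protocol": "tcp", "dst_ip": "203.0.113.7", "dst_port": 443}))  # https
print(classify({"protocol": "tcp", "dst_ip": "10.20.1.5", "dst_port": 1433}))   # app-servers
print(classify({"protocol": "udp", "dst_ip": "198.51.100.9", "dst_port": 53}))  # class-default
```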

Marking

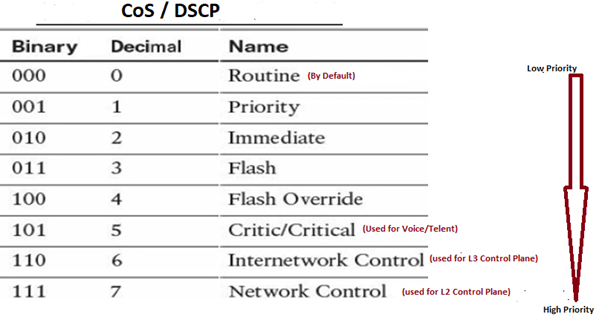

Marking is the QoS feature that ‘marks’ a packet so that it can be identified and differentiated from other packets for QoS treatment. At Layer 2, we use the CoS field (3 bits) in the Dot1q/ISL header; at Layer 3, we use the IP Precedence field (3 bits) or the DSCP field (6 bits) in the IP header to mark traffic according to its priority.

CoS and IP Precedence are 3-bit fields, so they use decimal values 0-7 to prioritize traffic. DSCP is a 6-bit field with decimal values 0-63.

Some of these values are reserved for specific types of traffic, i.e. ‘CoS 7’ is reserved for Layer 2 control plane traffic (CDP, DTP, etc.), ‘CoS 6’ for Layer 3 control plane traffic (OSPF, EIGRP, etc.), and ‘CoS 5’ for voice traffic, while ‘CoS 0’ is the default value for data traffic, which is handled as best effort in FIFO (First In, First Out) order.

We can prioritize data traffic only by using decimal values 1-4. For example:

- HTTPS: CoS 4

- SMTP: CoS 3

- FTP: CoS 2

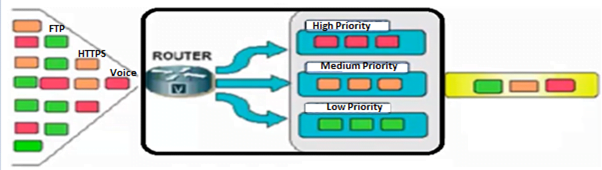
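At the bit level, marking just means writing these fields. The Python sketch below reads and writes IP Precedence and DSCP in the IPv4 ToS byte (the upper 3 and upper 6 bits respectively; the lower 2 bits are ECN) and CoS in the 16-bit 802.1Q Tag Control Information (the upper 3 bits, followed by the DEI bit and the 12-bit VLAN ID). The function names are hypothetical, and ISL is not covered. DSCP 46 (EF) is the value commonly used for voice; its upper 3 bits give IP Precedence 5.

```python
def ip_precedence(tos):
    """IP Precedence: the top 3 bits of the IPv4 ToS byte (0-7)."""
    return tos >> 5


def dscp(tos):
    """DSCP: the top 6 bits of the same byte (0-63)."""
    return tos >> 2


def set_dscp(tos, value):
    """Mark a packet with a DSCP value, keeping the 2 low-order ECN bits."""
    if not 0 <= value <= 63:
        raise ValueError("DSCP must be 0-63")
    return (value << 2) | (tos & 0b11)


def cos(tci):
    """CoS (802.1p priority): the top 3 bits of the 802.1Q TCI."""
    return tci >> 13


def set_cos(tci, value):
    """Mark a frame with a CoS value, keeping the DEI bit and 12-bit VLAN ID."""
    if not 0 <= value <= 7:
        raise ValueError("CoS must be 0-7")
    return (value << 13) | (tci & 0x1FFF)


tos = set_dscp(0, 46)                # voice marked EF
print(hex(tos), ip_precedence(tos))  # 0xb8 5
tci = set_cos(10, 5)                 # VLAN 10, CoS 5 (voice)
print(cos(tci), tci & 0xFFF)         # 5 10
```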

Congestion Management

In this section, we will discuss the following points:

- Reason for Congestion

- Queuing Process

Reason for Congestion: Congestion occurs when a device receives inbound traffic (often from multiple interfaces) at a combined rate higher than the bandwidth of the egress link. Because the egress link is slower, the excess inbound traffic is buffered in a queue before it can exit.
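The arithmetic behind this can be sketched in a few lines of Python, under simplifying assumptions (constant rates, an unlimited buffer, hypothetical function names): only the surplus of inbound traffic over the egress rate accumulates in the queue, and that backlog turns directly into extra delay for every packet behind it.

```python
def backlog_mbit(ingress_mbps, egress_mbps, seconds):
    """Megabits waiting in the egress queue after `seconds` of constant input."""
    surplus = max(0, sum(ingress_mbps) - egress_mbps)  # only the excess must wait
    return surplus * seconds


def queuing_delay_ms(backlog, egress_mbps):
    """Time a newly arriving packet waits for the backlog ahead of it to drain."""
    return 1000 * backlog / egress_mbps


# Hypothetical: two 100 Mbps ingress links feeding one 100 Mbps egress link.
b = backlog_mbit([100, 100], egress_mbps=100, seconds=1)
print(b, queuing_delay_ms(b, egress_mbps=100))  # 100 1000.0
```

A one-second wait is far beyond the 150 ms voice budget; in practice real buffers are much smaller, so packets start being dropped long before such a backlog builds up.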

Queuing Process: We use queuing to manage congestion on a link. We classify and mark the ingress traffic and place it into one of the following priority queues:

- High Priority Queue

- Medium Priority Queue

- Low Priority Queue
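A minimal sketch of strict-priority scheduling over these three queues, in Python (the class name and packet labels are hypothetical): the scheduler always serves the highest non-empty queue first. This design has a known downside: under sustained high-priority load, the lower queues can starve, which is why platforms commonly pair a priority queue with bandwidth-guaranteed queues (for example, Cisco's LLQ).

```python
from collections import deque

LEVELS = ("high", "medium", "low")  # service order, highest first


class PriorityScheduler:
    """Strict priority queuing over three queues."""

    def __init__(self):
        self.queues = {level: deque() for level in LEVELS}

    def enqueue(self, packet, level):
        self.queues[level].append(packet)

    def dequeue(self):
        for level in LEVELS:  # a lower queue is served only if all higher ones are empty
            if self.queues[level]:
                return self.queues[level].popleft()
        return None  # nothing waiting


s = PriorityScheduler()
s.enqueue("ftp-1", "low")
s.enqueue("voice-1", "high")
s.enqueue("https-1", "medium")
s.enqueue("voice-2", "high")
print([s.dequeue() for _ in range(4)])  # ['voice-1', 'voice-2', 'https-1', 'ftp-1']
```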

Congestion Avoidance

In this section, we will discuss how to avoid congestion on an interface. The following methods are used:

- Tail drop

- Random Early Detection (RED)

- Weighted Random Early Detection (WRED)

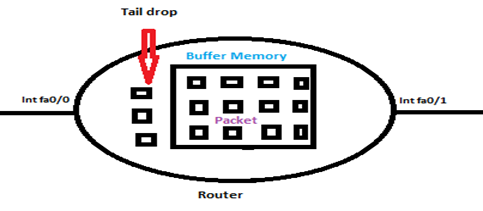

Tail drop: When the queue buffer is full, newly arriving packets are dropped. This is called tail drop, because packets are dropped at the tail of the queue.
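Tail drop is simply what a fixed-size FIFO does when it runs out of room. A minimal Python sketch with a hypothetical three-packet buffer: once the buffer is full, each new arrival is discarded regardless of what kind of traffic it is.

```python
from collections import deque


class TailDropQueue:
    """FIFO with a fixed capacity; arrivals are dropped while it is full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.packets = deque()
        self.dropped = []

    def enqueue(self, packet):
        if len(self.packets) >= self.capacity:
            self.dropped.append(packet)  # no room: the new packet is lost
            return False
        self.packets.append(packet)
        return True


q = TailDropQueue(capacity=3)
for pkt in ["data-1", "data-2", "data-3", "voice-1", "data-4"]:
    q.enqueue(pkt)
print(list(q.packets), q.dropped)  # ['data-1', 'data-2', 'data-3'] ['voice-1', 'data-4']
```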

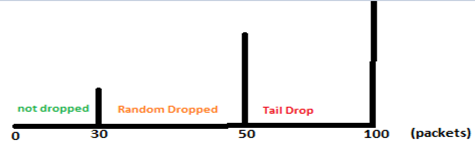

Random Early Detection (RED): In RED, packets are dropped randomly before the buffer becomes full, and the drop probability increases as the average queue depth grows. Dropping a few packets early signals TCP senders to slow down, which helps prevent tail drop.

Suppose the buffer capacity is 100 packets, with a minimum threshold of 30 packets and a maximum threshold of 50 packets. Using RED, while the queue holds fewer than 30 packets, no packets are dropped. Between 30 and 50 packets, some arriving packets are dropped randomly, with the drop probability rising as the queue approaches 50, so that tail drop is avoided. Beyond 50 packets, every arriving packet is dropped, which has the same effect as tail drop.
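The RED drop decision for this example can be sketched in Python. The thresholds of 30 and 50 packets come from the example; the maximum drop probability of 0.1 near the maximum threshold and the averaging weight of 0.002 are assumed values. RED compares an exponentially weighted average of the queue depth, not the instantaneous depth, against the thresholds, so short bursts are tolerated.

```python
import random

MIN_TH = 30     # packets: below this average depth nothing is dropped
MAX_TH = 50     # packets: at or above this average depth everything is dropped
MAX_P = 0.1     # assumed drop probability as the average approaches MAX_TH
WEIGHT = 0.002  # assumed weight for the moving average


def update_average(avg, current_depth, weight=WEIGHT):
    """Exponentially weighted moving average of the queue depth."""
    return (1 - weight) * avg + weight * current_depth


def drop_probability(avg):
    if avg < MIN_TH:
        return 0.0
    if avg >= MAX_TH:
        return 1.0
    # Linear ramp from 0 at MIN_TH towards MAX_P at MAX_TH.
    return MAX_P * (avg - MIN_TH) / (MAX_TH - MIN_TH)


def should_drop(avg, rand=random.random):
    """Randomly decide whether to drop an arriving packet."""
    return rand() < drop_probability(avg)


for depth in (20, 40, 60):
    print(depth, drop_probability(depth))
# 20 0.0
# 40 0.05
# 60 1.0
```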

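Weighted Random Early Detection (WRED): WRED extends RED by taking packet markings (IP Precedence or DSCP) into account. Packets are still dropped randomly, but lower-priority packets start being dropped earlier than higher-priority packets.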
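WRED can be sketched the same way as RED, but with a separate drop profile per marking. In this Python sketch the per-precedence thresholds are hypothetical values chosen for illustration, not any vendor's defaults: precedence 0 starts being dropped at an average depth of 20, precedence 3 at 26 and precedence 5 at 32, so at the same queue depth low-priority packets face a higher drop probability.

```python
# Hypothetical WRED profiles per IP Precedence: (min_th, max_th, max_p).
PROFILES = {
    0: (20, 40, 0.10),
    3: (26, 40, 0.10),
    5: (32, 40, 0.10),
}


def wred_drop_probability(avg_depth, precedence):
    # Unknown markings fall back to the precedence 0 (best-effort) profile.
    min_th, max_th, max_p = PROFILES.get(precedence, PROFILES[0])
    if avg_depth < min_th:
        return 0.0
    if avg_depth >= max_th:
        return 1.0
    return max_p * (avg_depth - min_th) / (max_th - min_th)


for prec in (0, 3, 5):
    print(prec, round(wred_drop_probability(30, prec), 3))
# 0 0.05
# 3 0.029
# 5 0.0
```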

Policing: Policing enforces a rate limit on a specific type of traffic (e.g. HTTPS, voice, or video traffic) by dropping packets that exceed the limit.

For example, suppose we enforce a rate limit of 1 Mbps for HTTPS traffic. If HTTPS traffic exceeds 1 Mbps, the excess packets will be dropped.
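Policers are commonly built on a token bucket. Below is a minimal single-rate sketch in Python for the 1 Mbps HTTPS example; the 3,000-byte burst size, the class name and the packet sizes are assumptions. Tokens (bytes) refill at the policed rate; a packet that finds enough tokens is forwarded, and one that does not is dropped immediately rather than queued.

```python
class TokenBucketPolicer:
    """Single-rate policer: conforming packets pass, excess packets are dropped."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate = rate_bps / 8   # refill rate in bytes per second
        self.burst = burst_bytes   # bucket depth
        self.tokens = burst_bytes  # bucket starts full
        self.last = 0.0            # time of the previous packet (s)

    def allow(self, size_bytes, now):
        # Add tokens for the elapsed time, never beyond the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True   # conform: forward the packet
        return False      # exceed: drop the packet


https = TokenBucketPolicer(rate_bps=1_000_000, burst_bytes=3000)
print([https.allow(1500, now=0.0) for _ in range(3)])  # [True, True, False]
print(https.allow(1500, now=1.0))                      # True (bucket refilled)
```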

Shaping: Shaping also enforces a rate limit on a specific type of traffic, but instead of dropping excess packets, it delays them in a buffer and sends them out at the configured rate.
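For contrast with policing, a shaper can be sketched as computing a departure time for each packet instead of dropping it. This Python sketch assumes an unlimited buffer and uses a simple virtual-clock model; real shapers are usually configured with a token bucket (a committed rate and a burst per interval). The class name and numbers are hypothetical.

```python
class Shaper:
    """Delays packets so the output never exceeds rate_bps; nothing is dropped."""

    def __init__(self, rate_bps):
        self.rate_bps = rate_bps
        self.next_free = 0.0  # time (s) when the next packet may start sending

    def departure_time(self, size_bytes, arrival):
        # A packet waits in the buffer until earlier packets have been sent.
        start = max(arrival, self.next_free)
        self.next_free = start + size_bytes * 8 / self.rate_bps
        return start


shaper = Shaper(rate_bps=1_000_000)
# Three 1250-byte packets arrive together; each takes 10 ms at 1 Mbps.
print([shaper.departure_time(1250, arrival=0.0) for _ in range(3)])  # [0.0, 0.01, 0.02]
```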

In this article, we have learned the basic requirements for QoS in our network environment. We went through the different QoS models and their working mechanisms. We also discussed QoS marking at Layer 2 and Layer 3 using the ‘CoS’ and ‘DSCP’ fields. QoS configuration will be covered in the next blog. You can reach out to us at https://zindagitech.com/

Author

Sani Singh

Consultant – Enterprise Networking